If we want social capital markets to fund the social sector effectively, we need to use social impact data effectively when making investment decisions. And investment decision-making almost always requires that we compare social impact data across locations, programs, or organizations. This is difficult, because contexts, missions, definitions, measurement approaches, and values differ. It’s always apples to oranges, and this “comparison problem” affects not only good decision-making but also our ability to report on impact at the investment portfolio level.

Accepted wisdom is that we can solve the comparison problem with better impact measures (methods, definitions, and standards). This works on a small scale; many grantmaking foundations and impact-investing firms solve their comparison problems by mandating common measures across their portfolios. But on a larger scale—when initiatives and enterprises differ in mission; theory of change; or socio-economic, cultural or geographic context—common measures don’t work as well. Those closest to the impact sound a familiar refrain: Common measures ask the wrong questions, measure the wrong things, and miss the real impact. Context affects how we ought to measure impact. The definition of “job,” for example, might specify a living wage and full-time hours in some contexts, but allow entrepreneurial self-employment in others. The more contexts vary, the more likely it is that a rigid approach displaces a more insightful one. In other words, the more we rely on common measures to solve the comparison problem, the more we end up compromising the meaningfulness of social impact measures themselves. This is why measurement alone cannot solve the comparison problem.

We can, however, achieve comparability by focusing on the analytical skills needed to compare social impacts without mandating a rigid set of required metrics. The premise is that efficient capital markets demand analysts who are capable of interpreting and comparing apples and oranges. Why? Because they understand fruit. The market is best served when each organization can measure its social impact in the way that is most meaningful and insightful to its aim and operations, as long as it follows common principles for good measurement. Drawing insights from financial accounting, good analysts focus on measures that are flexible and adaptable to different contexts (within limits), applied consistently (organizations pick an approach and stick to it), and well disclosed (bring on the fine print!). We achieve comparability not at the moment of measurement, but after the analysts adjust, aggregate, and interpret the measures that get reported.

It’s better to manage variation than to eliminate it.

The most die-hard proponents of standardized social impact measures argue: “Sure, we lose something when all organizations use the same measures, but what we lose is so much smaller than what we gain. We all just need to commit to one method and stop wasting time on tiny details.” Financial accounting once gave that a try, and found it untenable.

In the mid-1800s, there was nothing resembling today’s financial accounting standards. Historians looking back on the accounts of early industrial companies note that it is difficult to compare the profitability of companies in the same industry, or even of the same company over a short series of years. Similar organizations chose different things to measure, different ways of counting, and different ways of valuing. When the Mercantile Laws Commission explored the feasibility of standard financial disclosure in 1854, businesses were opposed. One respondent, Lord Curriehill, described “the impracticality of ascertaining correctly, at any time, the amount or even the existence of profits.” As with social impact measures today, there was too much variability for disclosures to be comparable.

Years later, in the midst of a railway boom, businessmen believed that variation should be eliminated by creating uniform accounting practices.¹ A New York Times letter to the editor in 1894 captured the widespread sentiment: “One general sentiment prevails, and that is that sound business principles would be best conserved if each railway company in the country was compelled by law to observe a uniform method in rendering its periodical statements of earnings and expenses.” This is the essence of the argument made by advocates of standardized social impact measures: Social capital markets are best served by a single, common method for measuring social impact.

Governments created and mandated “uniform accounts” for railway companies in the United States, England, and Canada, but these accounts didn’t live up to their promise. Small differences in definitions, timing, and methods created subtle variations that significantly undermined comparability. Regulators found themselves defining ever more minute aspects of measurement to try to achieve true uniformity, a complex and expensive task. Most importantly, companies found that reducing measurement variation reduced measurement relevance. In 1935, George O. May of the American Institute of Accountants (the predecessor of today’s American Institute of Certified Public Accountants) wrote: “The trouble with an 'official' system of accounting is … wide variations are possible … and which variation should be adopted is a question on which one cannot rightly be dogmatic.” Variation, it turns out, is best managed, not eliminated.

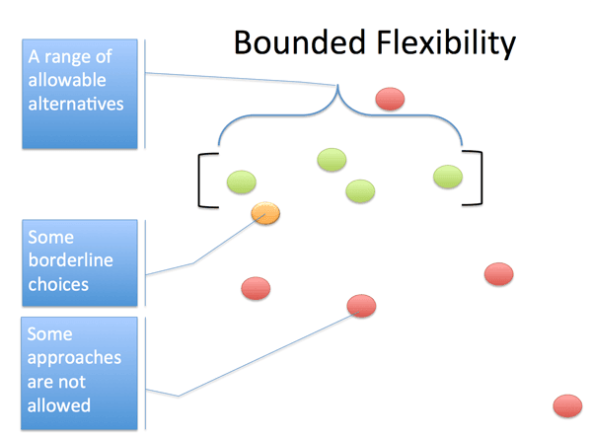

Current financial standards manage variation with what might be described as bounded flexibility, or choice within limits, coupled with consistency (pick a method and stick to it) and disclosure (fine print gives details on methods used). Different businesses with different strategies in different industries choose different methods for asset valuation, depreciation, and so on, and must disclose the methods they use. Financial statements aren’t perfectly comparable. Instead, we expect users to be aware of the different approaches and to understand how different measurement choices affect reported profit and other important numbers. Said differently, today’s financial standards achieve some degree of comparability, while allowing variation in measurement, by putting the onus on the user to recognize the significance of different accounting policies.

Bounded flexibility in measurement and reporting strikes a middle ground between “anything goes” and “one right way.”

This brief history offers three bits of wisdom to those arguing for standardized social impact measures:

- When we eliminate variation, we lose something important: relevance. Relevance manifests in details such as the timing of when to measure an outcome or the definition of a term. What is at stake is not trivial; it is the meaningfulness of the measure itself.

- Uniform measures are difficult to achieve and expensive to maintain.

- We can achieve a reasonable level of comparability with bounded flexibility, consistency, and disclosure, so long as there are skilled analysts who can make sense of the differences in measurement approaches.

We need complex social impact reports and people who can read them.

Cultivating analysts who can make use of impact reports without influencing how organizations collect and measure impact data will enable the sector to share higher-quality information through social capital markets, reduce the overall reporting burden, and disentangle values and valuations.

The bounded flexibility that financial accounting embraced is already a tenet of leading approaches to measuring and reporting social results. Impact Reporting and Investing Standards (IRIS), the G8 Social Investment Task Force report “Measuring Impact,” the European Commission’s “Proposed Approaches to Social Impact Measurement,” and “The Guide to Social Return on Investment Analysis” each take a flexible approach to selecting, defining, and collecting indicators based on stakeholder interests, program area, and budget. IRIS has gone further by translating total flexibility into separate indicator options; for example, the job codes PI3687 and PI2251 allow organizations to choose the “standard” indicator that is most relevant. The Global Reporting Initiative (GRI) has also moved in this direction with a materiality grid that offers organizations a structured and verifiable approach to selecting relevant measures. These approaches are on the right path.

Indicator flexibility works only if organizations transparently disclose the precise definitions, measures, and timings that underpin their reported social impact. Social Value International (SVI), GRI, and IRIS all recommend that organizations disclose the technical details of their impact measurement, yet such details remain sparse in most reports. Many social-purpose organizations claim that no one reads their impact reports; donors, investors, and other stakeholders just want one compelling statistic and a good story. That may be true of most audiences, but one group of people needs and wants detailed reports: analysts.

Analysts can expertly make sense of the technical details in a lengthy report and can use that expertise to compare dissimilar measures of impact. Analysts in the social impact space include organizations assessing and comparing charity impacts (such as Charity Navigator, GiveWell, Charity Intelligence), corporate environmental sustainability (Sustainalytics, Trucost), impact investments (HIP Investor, Caprock, B Lab’s B Analytics), and certain products or industries (Fairtrade International, BuyUp). Donors, investors, and consumers are migrating to these services, and in some cases paying considerably for them. There are also analysts inside foundations, government, and grantmaking charities. To do their jobs well and efficiently, analysts want and need thorough and detailed social impact reports from the organizations they review. Without such reports, they find themselves sending out surveys, interviewing organizations, and otherwise taking up organizations’ time and influencing their data collection and measurement.

How do social impact analysts assess different measures?

The skills of this new profession are nascent, but they are rooted in long-standing disciplines, and established communities of practice are developing and refining them for application to the emerging social capital marketplace.

First, these analysts think about social impact measurement holistically—reading across techniques, instead of just designing an evaluation or performance monitoring system for a particular program, or just applying a particular technique such as theory of change, sustainable livelihoods, or social return on investment (SROI). They have conceptual frameworks that map the boundaries of analysis (scope, domain, level, timeframe), process (stakeholder involvement, consideration of change relative to baselines, third-party verification), and report content (materiality, notions of performance), as well as composition of a measured change (observables, estimations, assumptions, calculations). These frameworks function as translating mechanisms that allow analysts to understand and compare different approaches.

Second, the analysts know how to adjust for differences in measures. Sometimes adjustments are qualitative calibrations of the reported impact (for example, noting that a report’s very conservative assumptions likely understate impact), and sometimes they are quantitative (for example, subjecting the assumptions to sensitivity analysis and recalculating results using probability-weighted scenarios, or plugging publicly reported outputs into pre-built models to see how organizations compare under common outcome assumptions).
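To make the quantitative adjustments concrete, here is a minimal Python sketch of two of them: re-running reported outputs through one common outcome assumption, and recalculating an outcome as a probability-weighted average across scenarios. The organizations, trainee counts, job-placement rates, and scenario probabilities are all hypothetical, and the function names are illustrative only, not part of any real analyst's toolkit.

```python
from dataclasses import dataclass

# Hypothetical example: all names, counts, and rates are invented for illustration.


@dataclass
class Report:
    name: str
    trainees: int            # reported output: people trained
    claimed_job_rate: float  # organization's own assumption: share who find jobs


def reported_jobs(r: Report) -> float:
    """Outcome as the organization itself reports it."""
    return r.trainees * r.claimed_job_rate


def jobs_under_common_assumption(r: Report, common_rate: float) -> float:
    """Re-run the reported output through one shared outcome assumption."""
    return r.trainees * common_rate


def expected_jobs(r: Report, scenarios: list[tuple[float, float]]) -> float:
    """Probability-weighted outcome across (probability, job_rate) scenarios."""
    if abs(sum(p for p, _ in scenarios) - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(p * r.trainees * rate for p, rate in scenarios)


reports = [Report("Org A", 200, 0.80), Report("Org B", 300, 0.40)]
# The analyst's own (hypothetical) beliefs about the true placement rate.
scenarios = [(0.25, 0.30), (0.50, 0.50), (0.25, 0.60)]

for r in reports:
    print(
        f"{r.name}: reported={reported_jobs(r):.0f}, "
        f"common={jobs_under_common_assumption(r, 0.50):.0f}, "
        f"expected={expected_jobs(r, scenarios):.1f}"
    )
```

On their own claims, Org A (160 jobs) looks stronger than Org B (120 jobs); under a common 50 percent placement assumption the order reverses (100 versus 150). The specific numbers matter less than the fact that an adjustment can change a comparison, which is why analysts need the underlying assumptions disclosed.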

Third, these analysts apply a valuation to the reported measures. Incommensurable measurements can be expressed as greater or less than one another only by reference to values. It is at the valuation stage of analysis, for example, that the pollution generated by a large manufacturer may be reconciled with the fact that the company also has good labor relations and an exemplary safety record. Analysts can do valuation by expressing all outcomes in monetary terms, but they can also make non-monetized judgment calls (perhaps represented by numeric weights) based on personal or shared values; GIIRS ratings are one example. The analyst values impacts in a manner that is consistent with her own or her clients’ priorities.
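A non-monetized valuation can be sketched as a weighted sum in which the weights carry the analyst's values. In this minimal Python example, the two manufacturers, their normalized indicator scores (0 to 1), and both analysts' weights are invented for illustration; real rating schemes such as GIIRS are considerably more elaborate and are not reproduced here.

```python
# Hypothetical example: company names, normalized scores (0-1), and weights
# are invented for illustration and do not come from any real rating system.

companies: dict[str, dict[str, float]] = {
    "Manufacturer X": {"pollution": 0.8, "labor_relations": 0.9, "safety": 0.9},
    "Manufacturer Y": {"pollution": 0.3, "labor_relations": 0.5, "safety": 0.6},
}

# Each analyst's values are encoded as weights; pollution is harmful, so it
# carries a negative weight for both analysts.
analyst_weights: dict[str, dict[str, float]] = {
    "environment-first": {"pollution": -0.7, "labor_relations": 0.15, "safety": 0.15},
    "workers-first": {"pollution": -0.2, "labor_relations": 0.5, "safety": 0.3},
}


def value(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of indicator scores; the weights carry the value judgments."""
    return sum(weights[k] * scores[k] for k in weights)


def rank(weights: dict[str, float]) -> list[str]:
    """Company names ordered from highest to lowest valuation under these weights."""
    return sorted(companies, key=lambda c: value(companies[c], weights), reverse=True)


for analyst, weights in analyst_weights.items():
    print(analyst, rank(weights))
```

With identical reported data, the environment-first weights rank Manufacturer Y above Manufacturer X, while the workers-first weights reverse that order. The measurement is shared; only the valuation differs.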

Analysts don’t do social impact measurement; they compare reported social impact information. It is surprising that the techniques to skillfully interpret and compare reporting are so underdeveloped, particularly in comparison to the effort given to measuring impacts. The fact is that most people are actually more likely to read impact reports than they are to measure impact. Focusing on a measurement solution leaves these folks with the problematic assumption that if they are having a tough time interpreting and comparing impact reports, they should implement a (new) common measurement system.

We need better-quality information and valuations, and reduced reporting burden.

Cultivating more skilled analysts would reduce the overall reporting burden. Mission-driven organizations could create a single, general-purpose report for analysts to interpret for their own purposes, and analysts could demand that organizations use measurement approaches that are the most informative, not necessarily the most widely used. Thus, social impact data coming out of organizations would be more meaningful and valuable.

Better yet, analysts would allow pluralistic valuations to thrive. Because we can simplify the complexity of social impact reporting only with reference to moral values, it is crucial that we give voice to different valuations of the same output and outcome data. Social capital markets are better served if many different analysts do valuation than if organizations value their own work. Because different people with different values will assess the relative worth of social outcomes differently, it is appropriate that the same “objective” impacts be valued differently by different consumers, investors, and donors. Analysts could help by disentangling “measuring” from “valuing.” Some so-called impact measurement approaches, such as SROI, might make more sense as analyst tools than as measurement tools.

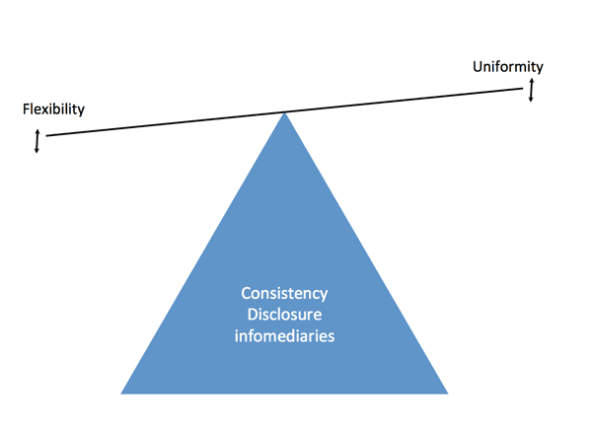

Analysts are infomediaries. In fields where data interpretation is complicated—such as financial accounting, medicine, and weather—experts consume technical information, make nuanced judgments, and distill important points for nonexperts (for example, buy-sell recommendations, a prescription, or a cloud icon). Different experts reach different opinions. Impact-focused investors, donors, and consumers will find experts they trust to inform their decision-making (and perhaps also consider the degree of expert consensus).

Financial accounting manages an ever-shifting balancing act between uniformity and flexibility. Consistency, disclosure, and intermediaries are crucial to sustaining the balancing act.

Measurement matters, but social capital markets need bounded flexibility in measurement, neither total flexibility nor total uniformity. Articulating well-defined measurement techniques is crucial, and we need to encourage organizations to be consistent from year to year with definitions and approaches (funders should not ask for changes!). Reports need to shift from promotion to methodical documentation. The keystone needed to support all of these advancements is a cadre of skilled analysts who can assess reported impact without influencing the measures themselves.

Creating this pool of social impact analysts will not be easy. We must learn from analysts already at work, empirically test their approaches, and then collect, organize, and teach best practices in business, policy, and law schools. Fortunately, the formation of this new profession is already well underway.

¹ The following articles from 1965 offer a good overview of the uniformity-relevance debate in accounting: Price, Walker and Spacek, “Accounting Uniformity in the Regulated Industries”; Powell, “Putting Uniformity in Financial Accounting in Perspective”; and Keller, “Uniformity versus Flexibility: A Review of the Rhetoric.”

Editor's note: This article develops ideas that were first published in Ruff, K., “The Role of Intermediaries in Social Accounting: Insights from Effective Transparency Systems,” in Mook, L. (Ed.), Accounting for Social Value. University of Toronto Press, 2013.

Read more stories by Kate Ruff & Sara Olsen.