Social purpose organizations need better ways to determine the quality of the evidence they use for making decisions. They need the strongest possible evidence they can find to plan effective action, demonstrate the value of their activities to funders and stakeholders, and influence public policy.

Yet funders, policy makers, and managers face competing definitions of what constitutes quality evidence. And while using evidence of any kind to make decisions is better than using no evidence, existing definitions may cause leaders to overlook valuable information that would improve their chances of making truly effective decisions.

Many in the field are calling for higher standards for evidence, or “stronger evidence.” Prevailing evidence hierarchies include the US Department of Education’s What Works Clearinghouse and the US Department of Health and Human Services’ Teen Pregnancy Evidence Review. But those focus on finding good supporting evidence for whole programs, policies, or products. They don’t consider how well evidence addresses the many other questions important to those who want to improve existing programs or create new social initiatives.

This is an important consideration, because the best approach usually isn’t to replicate a whole program. Instead, local innovation is usually more beneficial. For example, consider an existing organization in a small city that helps low-income older adults live longer lives at home. Rather than dismantle their existing program and attempt to implement a program originating in a large urban area, they are more likely to innovate a new model of care that meets the unique needs of their community. For a successful innovation, they would need to consider multiple services and practices, the organizations and people involved, the operating environment, and what it takes to support lasting change in people’s lives. For that, they need to draw on evidence from many types of studies and sources.

One resource for assessing any type of study is the transdisciplinary research quality assessment framework, which considers many aspects of study quality, such as whether the researchers explicitly account for limitations, whether the findings are transferable to other contexts, and whether the researchers disclose their funding sources and other potential biases. Other resources provide guidance for assessing evidence from specific types of studies. This checklist, for example, focuses on assessing the strengths and weaknesses of a systematic review—a study that finds and synthesizes information from multiple studies as they relate to the questions at hand.

However, these kinds of evaluations are time- and labor-intensive. Many organizations don’t have the expertise or funding needed for detailed analysis of every study. More important, for most practical purposes, social sector leaders need more than long lists of individual studies. They want a clear, scientific way to see where their evidence is strong (supporting high confidence in decisions) and where they need more information before committing scarce resources.

In our work providing research support to health and human service organizations at Meaningful Evidence, LLC, we have developed a way to assess research that can help leaders more easily find the best evidence to support their decisions. To illustrate how it works, we’ll use an example of a foundation that wants to develop a strong evidence base to inform activities around helping young homeless women in Seattle, Washington.

Step One: Map Your Evidence

The goal of this step is to combine evidence from multiple sources, and plot it on a map to gain a more complete and relational picture of available information.

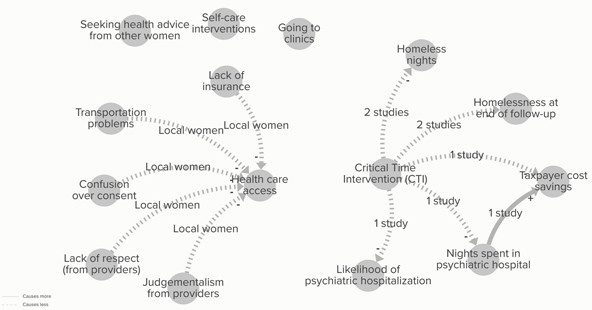

As the foundation begins to collect existing evidence, it comes across the Coalition for Evidence-Based Policy website. The site lists one evidence-supported program on housing and homelessness, the Critical Time Intervention (CTI), which shows sizable results in two well-conducted, randomized controlled trials (RCTs), both based in New York City. Both studies show that the CTI program reduced homelessness rates and duration. One of the studies reports taxpayer cost savings. The other reports a reduction in nights participants spent in a psychiatric hospital, which led to taxpayer cost savings. That study also found CTI participants had lower rates of psychiatric hospitalization compared to those who were randomly assigned to the control group.

The foundation then interviews a sample of young homeless women in Seattle to better understand their perspectives, and compiles this data in a report like this one. Say that, as in that study, homeless women explain they seek health advice from other women, try self-care interventions, and go to clinics. They also discuss several barriers they face in accessing health care: lack of insurance, confusing systems for giving consent for care at hospitals or clinics, transportation problems, and lack of respect from providers.

Using the mapping approach I described in a previous article, the foundation can plot out the data, as above (see an interactive version here). The map contains 15 elements (circles) and 11 causal relationships (arrows).
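For readers who prefer to keep such a map in a spreadsheet or script, here is a minimal sketch in Python of one way to record it. It is an illustration, not the tool we used: the `Arrow` record, the source labels, and the handful of arrows shown are assumptions drawn loosely from the example above, and they cover only part of the full map of 15 elements and 11 relationships.

```python
from collections import namedtuple

# One causal arrow on the map, plus the source that supports it.
# The field names and source labels are hypothetical.
Arrow = namedtuple("Arrow", ["cause", "effect", "source"])

# Illustrative subset only; this is not the complete map from the article.
arrows = [
    Arrow("lack of insurance", "health care access", "stakeholder interviews"),
    Arrow("transportation problems", "health care access", "stakeholder interviews"),
    Arrow("confusing consent systems", "health care access", "stakeholder interviews"),
    Arrow("lack of respect from providers", "health care access", "stakeholder interviews"),
    Arrow("CTI", "homelessness", "CTI trial 1"),
    Arrow("CTI", "homelessness", "CTI trial 2"),
]


def elements(arrows):
    """Every distinct circle on the map (anything that is a cause or an effect)."""
    return sorted({a.cause for a in arrows} | {a.effect for a in arrows})


def relationships(arrows):
    """Every distinct arrow; two sources for the same arrow count once."""
    return sorted({(a.cause, a.effect) for a in arrows})


map_elements = elements(arrows)
map_relationships = relationships(arrows)
print(len(map_elements), "elements,", len(map_relationships), "causal relationships")
```

Recording the source next to each arrow is the design choice that matters here, because the ratings in the next step depend on how many independent sources support each item.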

Step Two: Rate Your Evidence

The rating technique we developed builds on our consulting experience, as well as a paper by Steve Wallis (co-author of this article). Based on the work of philosopher Karl Popper and an innovative research stream, this technique allows leaders to assess evidence from research across methods and disciplines, stakeholder insight, their own outcomes measurement, and any other evidence source.

Unlike evidence hierarchies that rate the evidence for a whole program, the approach rates available evidence based on the number of conceptual elements (such as lack of insurance or homeless nights) and causal relationships (such as whether transportation problems are a barrier to health care access). That way, leaders can see where their evidence is strong and where they need more information.

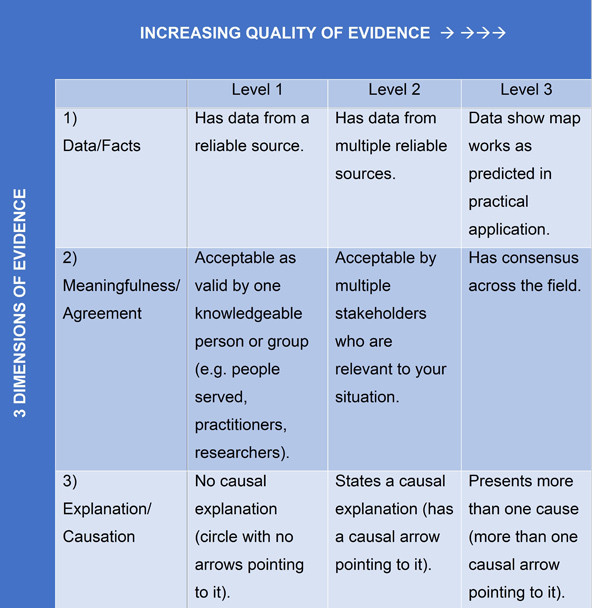

The table below shows an evidence appraisal matrix we created to help leaders more reliably ascertain whether their evidence is sufficient. The matrix considers three dimensions of evidence—data/facts, meaningfulness/agreement, and explanation/causation. These dimensions are important for deciding whether there is enough evidence to find and choose options that provide the greatest certainty of success compared with the alternative options.

Here is a breakdown of each dimension:

1. Data/Facts

When we’re making evidence-based decisions, we want to make sure that our evidence is factual. As researcher Patrick Lester puts it, we need to be able to distinguish good evidence from “fads, ideology, and politics.”

In the case of our example, eleven elements (all except homeless nights, homelessness, CTI, and taxpayer cost savings) and nine causal relationships have only one supporting data source. We see those as “level 1,” or more-tenuous, data/facts. To increase certainty in this information, the foundation might collect more related research and explore whether other sources confirm it, such as whether other research shows that lack of insurance is a barrier to health care access.

Two of the causal relationships and four of the elements (homeless nights, homelessness, CTI, and taxpayer cost savings) are based on evidence from two studies; these therefore get a “level 2” data/facts rating. The foundation can have more confidence in these data and in the decisions it bases on them.

None of the items on our map gets the highest rating of “level 3” data/facts. To reach that level, we’d want to see results from practical application in the situation at hand. For example, the foundation might help local providers reduce confusion over consent and measure whether that improves access to health care. If the results match the expectations of the map, we know we have good data.
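The data/facts rule can be written down in a few lines. The sketch below is one possible reading, not a definitive scoring formula: the function name `data_facts_level` and the `confirmed_in_practice` flag are hypothetical, and treating "two or more distinct sources" as level 2 is our assumption based on the example of two studies.

```python
def data_facts_level(sources, confirmed_in_practice=False):
    """Rate one element or causal relationship on the data/facts dimension.

    Assumed reading of the levels:
      level 1: a single supporting data source
      level 2: two or more distinct supporting sources
      level 3: results from practical application match the map
    """
    distinct = set(sources)
    if not distinct:
        raise ValueError("an item on the map needs at least one source")
    if confirmed_in_practice:
        return 3
    return 2 if len(distinct) >= 2 else 1


# Hypothetical source labels for two items from the example.
print(data_facts_level(["stakeholder interviews"]))      # lack of insurance
print(data_facts_level(["CTI trial 1", "CTI trial 2"]))  # CTI
```

Under these assumptions, an item supported only by the interviews rates level 1, and an item supported by both trials rates level 2, matching the ratings described above.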

2. Meaningfulness/Agreement

When leaders are focused on meeting the needs of their community, even seemingly solid facts aren’t enough. They need to understand the views of local people.

In our example, all items on the map get a “level 1” rating in this dimension, because their evidence comes from only one group of stakeholders (homeless women or researchers).

To bring this rating to “level 2,” the foundation might interview a variety of other stakeholders, such as local health care professionals, caseworkers, and government officials.

It might also convene an advisory group to get the perspectives of a wider range of local and national stakeholders and experts. That would help move the map toward a consensus in the field and a “level 3” rating.

3. Explanation/Causation

For an evidence map to be useful for organizations addressing big issues, it needs to include more than a laundry list of facts and viewpoints. Leaders need to understand the inter-connected causes and effects of the complex social problems they face.

Returning to the example map, nine elements have no causal arrows pointing to them, so the foundation should rate them as “level 1.” Four elements have one causal arrow pointing to them, lifting them to “level 2.”

To improve these ratings, the organization might gather more studies to find missing explanations. It might discover new causal connections between points on the map. For example, improved health care access might decrease the likelihood of psychiatric hospitalization.

Two elements in this case (health care access and taxpayer cost savings) have multiple causal arrows pointing to them and rate as “level 3” in this dimension. Decisions made around these areas will more likely lead to reliable results.
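Because this dimension depends only on how many arrows point to each element, it is easy to compute from a list of arrows. The sketch below assumes that two or more incoming arrows means level 3, which is our inference from the example rather than a stated rule. The function name `causation_levels` and the short list of arrows are hypothetical and cover only part of the map.

```python
from collections import Counter


def causation_levels(elements, arrows):
    """Rate each element by the number of causal arrows pointing to it.

    Assumed reading: 0 incoming arrows is level 1, 1 is level 2,
    and 2 or more is level 3.
    """
    incoming = Counter(effect for _cause, effect in arrows)
    return {name: min(incoming[name] + 1, 3) for name in elements}


# Illustrative subset of the example map, written as (cause, effect) pairs.
arrows = [
    ("lack of insurance", "health care access"),
    ("transportation problems", "health care access"),
    ("CTI", "homelessness"),
]
elements = sorted({name for pair in arrows for name in pair})

levels = causation_levels(elements, arrows)
for name in elements:
    print(name, "-> level", levels[name])
```

In this subset, the barriers and CTI have nothing pointing to them and sit at level 1, homelessness has one incoming arrow and sits at level 2, and health care access has two and reaches level 3.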

Ultimately, the closer your evidence is to “level 3” in all three dimensions, the greater your organization’s ability will be to make successful decisions and the more easily your organization will be able to demonstrate the benefits of its activities to stakeholders.

In our work, these techniques have helped managers and policy strategists quickly assess the strengths and weaknesses of their knowledge base. With this method, you can more easily see where you have enough evidence to make a successful decision and where you might need more research—and how to focus that research to get the most bang for your research buck. This opens an exciting path for organizations to work with greater efficiency, better address significant social problems, and make a greater impact in their communities.


Read more stories by Steven E. Wallis & Bernadette Wright.