(Illustration by Hugo Herrera)

In August 2022, a company called AI Insights issued a huge request for services on Amazon Mechanical Turk, an Amazon-owned marketplace where a globally crowdsourced pool of individual data workers can accept small digital tasks for pay. Those tasks vary widely: taking surveys, labeling objects in images, annotating data, repeating words aloud, or doing research. Many of the “Human Intelligence Tasks” (HITs) listed on Mechanical Turk are used to train artificial intelligence programs to better recognize information. Compensation also varies widely, starting at just a few cents per task, which means that actually making a living through Mechanical Turk requires working in volume.

And so, last year, when AI Insights posted a request for more than 70,000 HITs during what is typically a slow season on the platform, it represented a bonanza of opportunity for “Turkers,” as Mechanical Turk’s workers call themselves. But as they got to work, in some cases completing hundreds of HITs, the Turkers soon realized that AI Insights was rejecting all of their work en masse, without explanation. Under the platform’s guidelines, that meant the Turkers wouldn’t be paid, but AI Insights would get to keep their work all the same. And because individual Turkers’ approval ratings drop whenever their work is rejected, and most requesters on the site won’t accept work from Turkers with less than a 99% approval rating, the mass rejection sent many Turkers’ ratings tumbling, effectively blacklisting them through no fault of their own. When Turkers asked Amazon to intervene, the tech giant washed its hands of the situation, saying it can’t “get involved in disputes between workers and requesters.”

The fiasco represented one large example of how things can go wrong in the shadowy world of “ghost work”: a term coined by authors Mary Gray and Siddharth Suri, in their 2019 book Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass, to describe the largely invisible workforce whose labor is actually powering what we call “artificial” intelligence.

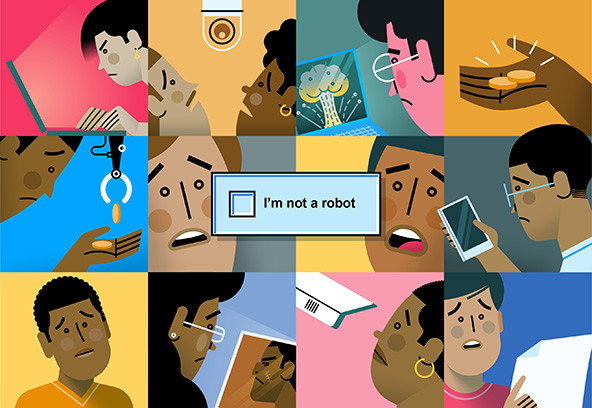

Although tech companies tend to obscure the fact, and the public rarely realizes it, artificial intelligence (AI) systems are not the purely automated processes they are marketed as; they are the product of human work. Some of that work is performed by ordinary internet users, as when we solve “CAPTCHA” puzzles in order to access a website. But most of it is performed by a globally dispersed army of data workers who are paid pennies and have little recourse when they’re mistreated.

While platforms like Mechanical Turk advertise themselves with a promise of ultimate flexibility—an appealing option for many people, including those juggling medical issues or family obligations—the data workers performing their assignments do so under a profound power imbalance. On Mechanical Turk, for example, workers are held to strict rules of accountability and identity verification, while requesters can be completely anonymous; that means if unscrupulous requesters gain a bad reputation for treating workers unfairly, they can simply reappear on the platform under a new name. (In fact, AI Insights did exactly this after mass rejecting Turkers’ work.) And since data workers are almost invariably contract workers, rather than employees, they’re not covered by labor protections against things like AI Insights’ wage theft.

They’re also paid differently based on where they’re located, a significant factor given that Mechanical Turk contracts laborers in nearly 200 countries and much of U.S. companies’ micro-work is outsourced to Africa and Asia. In the U.S., data workers typically make little above minimum wage for what is painstaking and repetitive work; workers in the Global South may take home less than $1.50 per hour. In some countries, Amazon pays workers not in actual money but in Amazon gift cards.

The tasks data workers perform can also be psychologically scarring. One of us (Krystal) has been a “Turker” for more than seven years. Several years ago, I received a HIT to annotate images that turned out to be graphic depictions of suicide. There was no content warning ahead of time and no HR follow-up offering counseling; there wasn’t even the rote inclusion of a suicide hotline for workers triggered by the task. In another instance, I was assigned a HIT labeling aerial photographs of non-traditional border crossings, following tire tracks and footpaths in an unidentified desert, without any explanation of what I was seeing or how my work would be used. All I could think of afterward was the people who’d made those tracks, and what my work had contributed to. Was I training AI systems to locate refugees fleeing violence and poverty in order to help them, or to capture and detain them?

Workers in poorer countries fare even worse. In Kenya, the large data contracting company Sama, best known for providing contract content moderation for Facebook, also provided AI training services for the U.S. corporation OpenAI, the creator of ChatGPT. This year, Time reported that Sama had paid workers in Kenya a take-home wage as low as $1.32 per hour to review tens of thousands of passages of toxic text containing hate speech and detailed descriptions of murder, rape, and child sexual abuse. The purpose was to train ChatGPT to detect and filter out such language in its outputs, making the AI tool safer for consumers and more profitable for its creators. But while AI companies rake in billions, the hidden laborers perfecting their products, the real ghosts in the machine, are left with trauma.

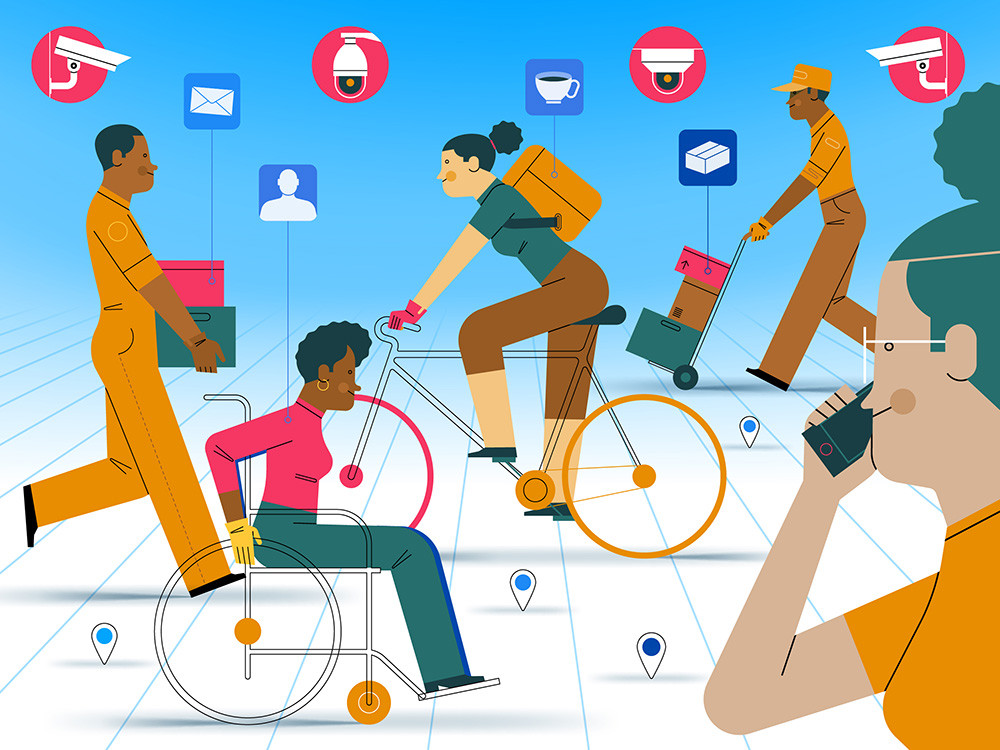

But the problems with how the AI sausage is being made aren’t the only issues with artificial intelligence. In one way or another, AI will change the way we work, and is already affecting workers worldwide in numerous ways. It’s there in the ways workers today are hired and fired by algorithmic systems with little ability to recognize human context. It’s there in the constant surveillance many workers labor under, invading their privacy and forcing them into punishing production quotas that risk their safety and health. It’s there also in the workers whose daily tasks are dictated by AI, whether or not that direction makes sense, and in how the same workers are being used as data points, often without their knowledge or consent. And, of course, it’s there in the way that AI threatens to make workers’ jobs more precarious by overpromising low-cost replacements and limiting their bargaining power.

For decades, workers around the world have seen good jobs disappear due to automation. The threat is now broader, reaching white-collar jobs such as clerical work, graphic design, and technical writing, which were long believed to be immune but are at risk from AI systems like ChatGPT.

The specter of AI-fueled mass unemployment isn’t even something tech companies deny; earlier this year, OpenAI’s CEO Sam Altman declared, “Jobs are definitely going to go away, full stop.”

It’s alarming how many companies don’t seem to realize the cascading effects the AI revolution may bring. It’s not just the people whose jobs disappear who will suffer, but everyone downstream from them too: the businesses where they’d have shopped; the schools, libraries, and fire departments their taxes would have funded; the entire communities that could turn into blighted shells as millions of people lose work and have no prospects to replace it. There are also the less quantifiable tolls on a community when its members enter fast food restaurants, pharmacies, and grocery stores and don’t encounter a single human employee. The mental health toll from the isolation many people experienced during the COVID-19 pandemic is a preview of what that might mean.

It can be hard to see an upside to AI technology, and often the only people who seem to be excited about it are the tech companies profiting massively from its rollout. Right now, workers are telling us that people are being harmed by AI, and in surveys, large majorities of people say they’re worried about its implications. But few of us know what to do about it.

In truth, the problem is so big that it can’t be solved by any one sector alone, and there are no overnight fixes.

The challenge is only made steeper by decisions like Citizens United, which cleared the way for virtually unlimited corporate political spending that stacks the deck against the needs of working people and grassroots movements. That imbalance plays out across many issues, and especially in the ways technology increasingly shapes and degrades work.

Some companies are opting out of AI for various reasons, both on principle and for business considerations. But there is more that companies of all kinds can do, starting with committing to a standard of transparency about how they will use AI, and giving both workers and consumers a meaningful way to understand, and opt out of, data collection.

Workers finding ways to band together is also imperative. The nature of digitized, globally dispersed work is to separate workers, and unionizing hundreds of thousands of micro-workers across some 200 countries is, at this point, infeasible. But workers can nonetheless find ways to connect and protect their rights. Over the last few years, Turkopticon, initially a web forum designed to help Mechanical Turk contractors share tips and warnings, has grown into a large worker advocacy group that makes collective demands of Amazon to treat Turkers better. In 2021, workers at Appen, another crowdsourced data firm whose global workforce provides feedback to train AI, began to organize with the same union that represents Google employees.

Tech companies don’t like this. Turkopticon has seen Amazon officials try to infiltrate almost all of its worker forums, and six Appen micro-workers were abruptly terminated this year in what seemed like clear retaliation for their organizing efforts. But workers united in defense of their rights have far more power than individual “ghost workers” alone: a few weeks later, the Alphabet Workers Union announced that the fired Appen workers had been reinstated with back pay.

We need national and international labor organizations that are willing to sanction companies when workers file unfair labor practice complaints, and we need sectoral bargaining to cover crowd and gig workers in countries that allow it.

At the highest level, though, we need policy solutions. We need thoughtful, well-informed politicians who are willing to tell tech companies no, and to reward businesses that do right by their workers through approaches like tax incentives for corporations that refuse to replace workers with AI. We also need our leaders to seriously engage with ideas like universal basic income (UBI) to help prepare for the massive changes in our economy that may come anyway, as millions of people find themselves out of work but still very much in need of food and shelter. But UBI must not come at the cost of cutting existing social protection programs, a trade-off some UBI advocates in the tech sector now suggest.

The AI technology revolution is not inevitable, and with solidarity and awareness of its threats, we can at least meet it on our feet.

Good things happen when workers are given a voice in workplace design choices. Listen to the discussion from SSIR’s 2023 Data on Purpose conference:

Read more stories by Krystal Kauffman & Adrienne Williams.